Prompt Engineering with Gemini API: Not Magic, Just Method

How to turn Gemini into a backend that thinks—and returns JSON.

Let’s get one thing straight—this isn’t another “how to talk to ChatGPT” cheat sheet.

We're building real software using the Gemini API, configuring LLM prompts, and generating structured JSON outputs from generative models.

This is prompt engineering for developers — to power real-world apps, tools, and LLM API workflows.

🧭 Quick Summary

For the impatient builder

This article isn’t about “chatting with AI.” It’s about building real software using Gemini’s API.

Learn 7 core prompting techniques (Zero-shot, Chain-of-Thought, ReAct, and more).

Follow 3 working API examples with structured output using Pydantic.

See what broke, what worked, and how to debug Gemini like a dev.

Get inspired with real-world use cases and my personal takeaways from Kaggle's GenAI training.

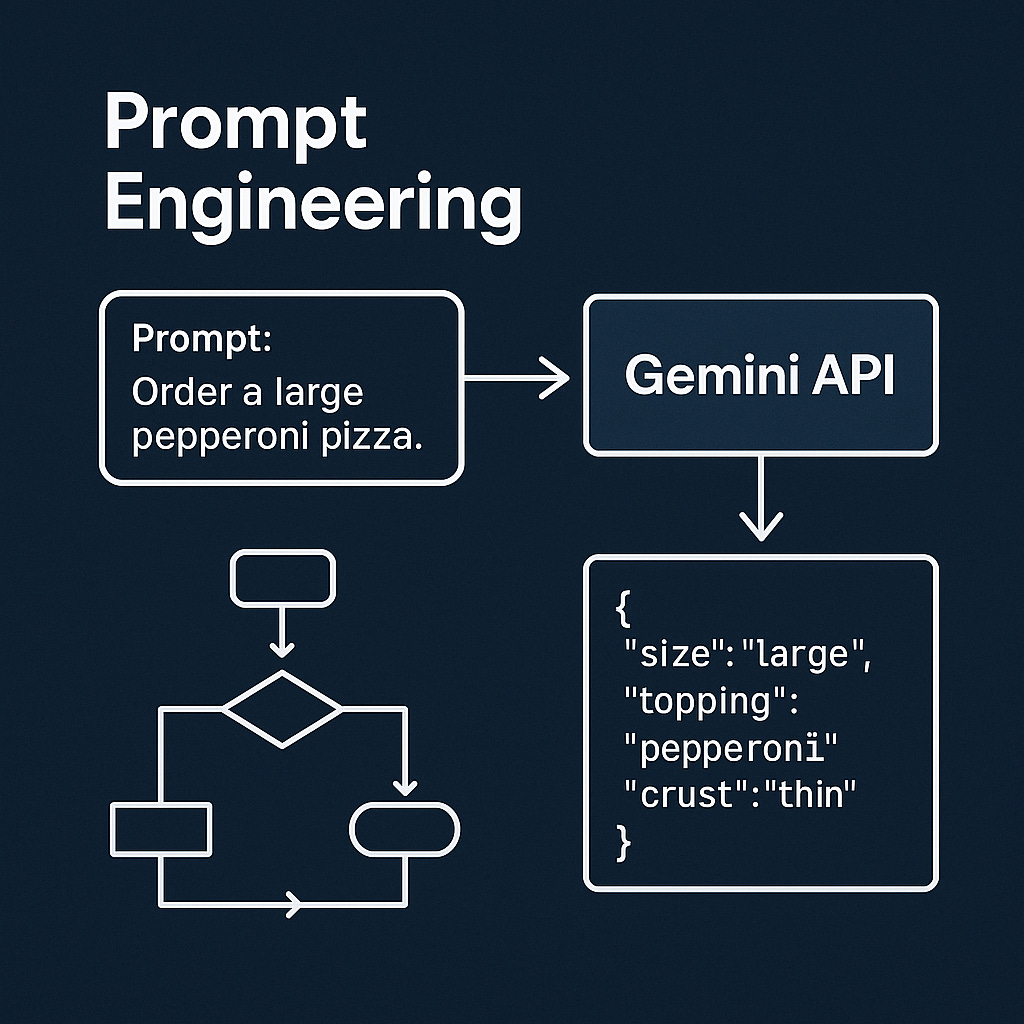

What is Prompt Engineering, Really?

Large Language Models (LLMs) are just probability machines. They predict the next token based on your input prompt. (I’ll explain how tokens work in my next article in this series.) That prompt can be vague and fluffy, or it can be structured, layered, and tuned to give you exactly what you want. That’s where prompt engineering becomes a craft.

And when you're calling the model via API? It becomes even more real: the config you pass matters just as much as the words in your prompt. You want reproducibility, reliability, and structure? You’d better speak the model’s language and its parameters.

Prompt Engineering Techniques: 7 Methods to Power LLM API Workflows

Let’s break down some of the most useful prompt patterns I encountered and tested:

🤔 Zero-shot, One-shot, Few-shot

What is it?

Zero-shot: "Just do the task."

One-shot: "Here’s one example. Now do it."

Few-shot: "Here are a few examples. Get the pattern."

When to use it?

Start with zero-shot for simple tasks.

Move to few-shot when the task involves structure or nuance.

Quick take: Few-shot prompting is like giving the model a cheat sheet before the exam.
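To make the zero/one/few-shot distinction concrete, here is a minimal sketch of a prompt builder. The function name and the "Input:/Output:" layout are my own illustrative choices, not anything Gemini requires. Pass no examples and you get a zero-shot prompt; pass one or more and you get one-shot or few-shot.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a prompt from a task description, worked examples, and the new input."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # Leave the final output blank so the model completes the pattern.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hand-written examples for illustration only.
examples = [
    ("The app crashes on login.", "bug"),
    ("Please add dark mode.", "feature_request"),
]
prompt = build_few_shot_prompt(
    "Classify each support message as bug or feature_request.",
    examples,
    "Uploading a photo freezes the screen.",
)
print(prompt)
```

The resulting string can be sent as the request contents like any other prompt.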

👨‍💼 System / Role / Contextual Prompting

What is it? Set the tone or role for the model: "You are a medical assistant..."

When to use it?

When you need domain-specific or styled answers.

Why it matters: Telling the model who it is helps constrain what kind of answers it gives.
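One way to keep the role apart from the per-request context is to build the two pieces separately. The sketch below is plain Python with hypothetical names (PromptParts, build_role_prompt). With the google-genai SDK I would expect the system text to go into the config's system_instruction field and the user text into contents, but treat that mapping as an assumption to check against the SDK docs.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    system: str  # who the model is and how it should answer
    user: str    # background context plus the actual question


def build_role_prompt(role: str, context: str, question: str) -> PromptParts:
    """Keep the role separate from the per-request context and question."""
    system = f"You are {role}. Answer only within that role and say so when unsure."
    user = f"Context: {context}\n\nQuestion: {question}"
    return PromptParts(system=system, user=user)


parts = build_role_prompt(
    role="a medical assistant who explains terms in plain language",
    context="The reader is a patient with no medical training.",
    question="What does 'hypertension' mean?",
)
print(parts.system)
print(parts.user)
```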

🛀 Step-back Prompting

What is it? Ask the model to reflect or generalize before jumping into a solution.

Example: "What are the key steps needed to solve this problem? Now, apply them."

When to use it?

For planning, strategy, summarization, and even debugging.
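Step-back prompting is really two calls: one for the general approach, one to apply it. Here is a rough sketch that takes the model call as a parameter, so it runs offline with a stand-in. The generate callable and the prompt wording are assumptions for illustration.

```python
from typing import Callable, List


def step_back(problem: str, generate: Callable[[str], str]) -> str:
    """Ask for general steps first, then apply them to the specific problem."""
    principles = generate(
        f"What are the key steps needed to solve problems like this?\n\n{problem}"
    )
    return generate(
        f"Problem: {problem}\n\nKey steps:\n{principles}\n\n"
        "Now apply these steps to solve the problem."
    )


# Stand-in for a real model call; it records the prompts it receives.
calls: List[str] = []


def fake_generate(prompt: str) -> str:
    calls.append(prompt)
    return "1. Reproduce. 2. Isolate. 3. Fix." if len(calls) == 1 else "Applied the steps."


answer = step_back("The upload endpoint times out intermittently.", fake_generate)
print(answer)
```

In a real setup, generate would wrap an API call and return the response text.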

⛓️ Chain-of-Thought (CoT)

What is it? Guide the model through a reasoning path before delivering the answer.

When to use it?

Math, logic, decision trees.

Pro tip: Use structured outputs to capture reasoning, not just the final answer.
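To show what "capture reasoning, not just the final answer" can look like on the receiving side, here is a small standard-library sketch that parses a JSON reply with a steps list and an answer field. The field names and the sample reply are hand-written assumptions, not real model output.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Reasoned:
    steps: List[str]
    answer: int


def parse_reasoned(raw: str) -> Reasoned:
    """Parse a JSON reply that keeps the reasoning steps next to the final answer."""
    data = json.loads(raw)
    steps = data["steps"]
    if not isinstance(steps, list) or not all(isinstance(s, str) for s in steps):
        raise ValueError("steps must be a list of strings")
    return Reasoned(steps=steps, answer=int(data["answer"]))


# Hand-written sample reply for an age puzzle (illustrative only).
raw = (
    '{"steps": ["Partner was 4 * 3 = 12", "Age gap is 12 - 4 = 8", '
    '"Now 20 + 8 = 28"], "answer": 28}'
)
result = parse_reasoned(raw)
print(result.answer, len(result.steps))
```

Keeping the steps in their own field means you can log or audit the reasoning without parsing prose.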

🔄 Self-consistency

What is it? Run the same prompt several times with sampling enabled, compare the outputs, and keep the answer that shows up most often.

When to use it?

Tasks where the answer varies from run to run, such as multi-step reasoning.

Gemini-specific note: Requires sampling + looping on your end (not yet built-in).
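The "sampling + looping on your end" part can be sketched in a few lines. The version below assumes a sample callable that returns one answer per call and uses a simple majority vote. Both are my illustrative choices, and the scripted replies stand in for a real model.

```python
from collections import Counter
from typing import Callable, Tuple


def self_consistent_answer(
    prompt: str, sample: Callable[[str], str], n: int = 5
) -> Tuple[str, float]:
    """Sample n answers and return the most common one with its vote share."""
    answers = [sample(prompt).strip() for _ in range(n)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n


# Scripted stand-in for a model sampled at a non-zero temperature.
scripted = iter(["28", "28", "36", "28", "24"])
answer, agreement = self_consistent_answer(
    "How old is my partner?", lambda prompt: next(scripted)
)
print(answer, agreement)
```

A low vote share is a useful signal in itself: it suggests the prompt or the task needs another look.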

🌳 Tree of Thoughts (ToT)

What is it? Generate multiple ideas/branches, evaluate them, and converge.

When to use it?

Planning, ideation, creative writing.
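"Generate branches, evaluate, converge" maps fairly naturally onto a small beam search. The sketch below is one possible reading of Tree of Thoughts, not a reference implementation. The propose and score functions are toy stand-ins for what would normally be model calls.

```python
from typing import Callable, List


def tree_of_thoughts(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    depth: int = 2,
    beam: int = 2,
) -> str:
    """Expand each kept branch, score the candidates, keep the best few, repeat."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for node in frontier for child in propose(node)]
        if not candidates:
            break
        frontier = sorted(candidates, key=score, reverse=True)[:beam]
    return max(frontier, key=score)


def propose(plan: str) -> List[str]:
    # Toy branching: every plan can be extended with one of three steps.
    return [f"{plan} > research", f"{plan} > draft", f"{plan} > ship"]


def score(plan: str) -> float:
    # Hypothetical heuristic: reward covering research and draft, penalize shipping early.
    steps = plan.split(" > ")
    return len({"research", "draft"} & set(steps)) - steps.count("ship")


best = tree_of_thoughts("plan", propose, score)
print(best)
```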

🧹 ReAct (Reasoning + Action)

What is it? Let the model reason and decide on next actions (think: mini agent).

When to use it?

When building agents that use memory, tools, or external APIs.
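Here is a bare-bones sketch of the ReAct loop: the model emits a thought and an action, your code runs the matching tool, and the observation is fed back in. The JSON step format, the tool name, and the scripted replies are all assumptions made so the example runs offline.

```python
import json
from typing import Callable, Dict


def react_loop(
    question: str,
    model: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate model thought/action turns with tool observations until it finishes."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        # Each reply is expected to be JSON with "thought", "action", and "input".
        step = json.loads(model(transcript))
        if step["action"] == "finish":
            return step["input"]
        tool = tools.get(step["action"])
        observation = tool(step["input"]) if tool else f"unknown tool: {step['action']}"
        transcript += (
            f"\nThought: {step['thought']}"
            f"\nAction: {step['action']}[{step['input']}]"
            f"\nObservation: {observation}"
        )
    return "no answer within step limit"


# Scripted model replies and a toy tool (both hypothetical).
replies = iter([
    '{"thought": "I need the order status.", "action": "lookup_order", "input": "A123"}',
    '{"thought": "I have what I need.", "action": "finish", "input": "Order A123 has shipped."}',
])
tools = {"lookup_order": lambda order_id: f"{order_id}: shipped"}
answer = react_loop("Where is order A123?", lambda transcript: next(replies), tools)
print(answer)
```

The step limit matters in practice: without it, a confused model can loop on tool calls indefinitely.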

Real Gemini API Examples: From Prompt to JSON with Pydantic

Most people think prompt engineering is just about clever wordplay in a chatbot. Nope.

When you're calling Gemini via API, prompt engineering becomes something else entirely: It’s code. It’s config. It’s structure. And if you don’t do it right, the model won’t do what you want.

Example 1: Structured Product Request (with Pydantic)

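import os

from google import genai
from google.genai import types
from pydantic import BaseModel

# Assumes the API key is stored in the GOOGLE_API_KEY environment variable.
client = genai.Client(api_key=os.environ["GOOGLE_API_KEY"])

class ProductRequest(BaseModel):
    product: str
    size: str
    color: str

prompt = "I’d like to buy a medium red hoodie, preferably in organic cotton if available."

# Low temperature keeps the extraction consistent; the schema constrains the JSON shape.
config = types.GenerateContentConfig(
    temperature=0.2,
    response_mime_type="application/json",
    response_schema=ProductRequest,
)

response = client.models.generate_content(
    model="gemini-2.0-flash",
    config=config,
    contents=prompt,
)

print(response.text)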

Output:

{
  "color": "red",
  "product": "hoodie",
  "size": "medium"
}

Structured. Usable. Zero post-processing.

Example 2: Chain-of-Thought + Structured Reasoning

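import os

from google import genai
from google.genai import types
from IPython.display import Markdown
from pydantic import BaseModel
from typing import List

# Assumes the API key is stored in the GOOGLE_API_KEY environment variable.
client = genai.Client(api_key=os.environ["GOOGLE_API_KEY"])

class MathExplanation(BaseModel):
    steps: List[str]
    answer: int

prompt = """When I was 4 years old, my partner was 3 times my age. Now,
I am 20 years old. How old is my partner? Let's think step by step."""

config = types.GenerateContentConfig(
    temperature=0.3,
    response_mime_type="application/json",
    response_schema=MathExplanation,
)

# Note: config is not passed in this call, so the reply comes back as free-form
# Markdown. Add config=config to get JSON matching MathExplanation instead.
response = client.models.generate_content(
    model='gemini-2.0-flash',
    contents=prompt)

Markdown(response.text)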

Output:

Here's how to solve this:

Find the age difference: When you were 4, your partner was 3 times your age, meaning they were 4 * 3 = 12 years old.

Calculate the age difference: The age difference between you and your partner is 12 - 4 = 8 years.

Determine partner's current age: Since the age difference remains constant, your partner is currently 20 + 8 = 28 years old.

Answer: Your partner is currently 28 years old.

You don’t just get a number. You get the reasoning, step-by-step, cleanly formatted. Perfect for tracing logic.

Example 3: Custom Ticket Classification (the clean version)

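import os

from google import genai
from google.genai import types
from pydantic import BaseModel
from typing import Literal

# Assumes the API key is stored in the GOOGLE_API_KEY environment variable.
client = genai.Client(api_key=os.environ["GOOGLE_API_KEY"])

class TicketResponse(BaseModel):
    # Literal keeps the allowed values inline in the generated schema.
    category: Literal["bug", "feature_request", "general_feedback"]

prompt = "The app crashes whenever I try to upload a photo."

config = types.GenerateContentConfig(
    temperature=0.1,
    response_mime_type="application/json",
    response_schema=TicketResponse,
)

response = client.models.generate_content(
    model="gemini-2.0-flash",
    config=config,
    contents=prompt,
)

print(response.text)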

Output:

{
  "category": "bug"
}

No fancy enums here — just clean literal values that Gemini can actually handle.
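Once the category arrives as JSON, routing it is ordinary code. Here is a minimal sketch using only the standard library. The handler names and return strings are hypothetical, and the allowed set mirrors the three literal values used above.

```python
import json
from typing import Callable, Dict

ALLOWED = {"bug", "feature_request", "general_feedback"}


def route_ticket(raw_json: str, handlers: Dict[str, Callable[[], str]]) -> str:
    """Parse the model's JSON reply, check the category, and call the matching handler."""
    category = json.loads(raw_json).get("category")
    if category not in ALLOWED:
        raise ValueError(f"unexpected category: {category!r}")
    return handlers[category]()


# Hypothetical handlers; real ones might open an issue or notify a team.
handlers = {
    "bug": lambda: "sent to engineering",
    "feature_request": lambda: "added to product backlog",
    "general_feedback": lambda: "logged for review",
}
destination = route_ticket('{"category": "bug"}', handlers)
print(destination)
```

The explicit membership check is a cheap safety net in case a reply ever falls outside the schema.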

Want to try these prompts yourself?

Check out my working notebook on Kaggle:

👉 🔗 View the full notebook here

Feel free to remix it, build on top of it, or break it creatively.

🌟 My Favorite Hacks from the Kaggle GENAI Training

Pydantic models are magic when it comes to structured prompting

response_mime_type="application/json" is not optional

Low temperature = higher consistency (especially when you want structure)

You can break Gemini with bad schemas—and it’s your fault, not the model’s

What Broke (a.k.a. Debugging My Way to Understanding)

Working with Gemini via API sounds smooth until you realize: LLMs are chill. Type systems are not.

❌ AttributeError: type object 'MyModel' has no attribute 'model_json_schema'

What it means: You passed a TypedDict or regular Enum to the response_schema. Gemini expects a Pydantic model that can expose .model_json_schema(). That method is part of Pydantic v2, so a model defined under Pydantic v1 would lack it too.

Fix:

from pydantic import BaseModel
from typing import Literal

class Response(BaseModel):
    category: Literal["bug", "feature_request"]

❌ ValidationError: Extra inputs are not permitted

What it means: Pydantic v2 generates enums as $defs references in JSON Schema. Gemini doesn't handle $ref cleanly yet.

Fix:

category: Literal["bug", "feature_request", "general_feedback"]
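If you want to catch this before sending a request, one option is to scan the generated JSON Schema for references. The sketch below is standard-library only. The two schemas are hand-written and abbreviated to roughly match what I understand Pydantic v2 to produce for an Enum field versus a Literal field, so treat their exact shape as an assumption.

```python
from typing import Any


def uses_refs(schema: Any) -> bool:
    """Return True if a JSON Schema contains "$ref" or "$defs" anywhere."""
    if isinstance(schema, dict):
        if "$ref" in schema or "$defs" in schema:
            return True
        return any(uses_refs(value) for value in schema.values())
    if isinstance(schema, list):
        return any(uses_refs(item) for item in schema)
    return False


# Approximate shape for an Enum field: the values live under $defs and are referenced.
enum_style = {
    "$defs": {"Category": {"enum": ["bug", "feature_request"], "type": "string"}},
    "properties": {"category": {"$ref": "#/$defs/Category"}},
    "type": "object",
}
# Approximate shape for a Literal field: the allowed values are inlined.
literal_style = {
    "properties": {"category": {"enum": ["bug", "feature_request"], "type": "string"}},
    "type": "object",
}
print(uses_refs(enum_style), uses_refs(literal_style))
```

With a real Pydantic v2 model, you could pass the result of MyModel.model_json_schema() to the same check.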

Pro Tip:

If your structured prompt raises weird exceptions, check:

✅ Are you using BaseModel, not TypedDict?

✅ Are you avoiding Enum and using Literal[] instead?

✅ Did you set response_mime_type="application/json"?

My Notebook Takeaways (a.k.a. “Where Gemini surprised me”)

I’ll be honest—going into this notebook, I thought I knew what to expect. Define a prompt, tune a temperature, get a response. Done. But nope. This thing goes deeper than your average ChatGPT playground hack.

What really stood out was Gemini’s ability to generate structured output: not just text that looks like JSON, but responses that conform to a Pydantic BaseModel schema, down to clean literals like "bug". That’s a game changer.

I also underestimated how config settings (like temperature, top_k, and response_mime_type) play together. You want clean, machine-usable output? You’d better tell the model exactly how you want it.

Once I got the right combo of prompt, schema, and config... it felt like calling a function that thinks.

How to Start Prompting Like a Pro

✅ Be specific (don’t assume the model reads between the lines)

✅ Use concrete examples (few-shot helps the model generalize)

✅ Tweak your config (temperature/top-p/stop tokens matter)

✅ Track iterations (prompt engineering is software development now)
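To build some intuition for why temperature and top-p matter, here is a toy model of next-token sampling in plain Python. It is a textbook-style illustration with made-up logits, not a description of how Gemini implements sampling internally.

```python
import math
from typing import Dict


def apply_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Softmax over logits divided by temperature; lower values sharpen the distribution."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


# Made-up logits for three candidate next tokens.
logits = {"red": 2.0, "blue": 1.0, "green": 0.0}
cool = apply_temperature(logits, 0.2)
warm = apply_temperature(logits, 1.0)
print(round(cool["red"], 3), round(warm["red"], 3))
print(sorted(top_p_filter(warm, 0.9)))
```

At the lower temperature almost all probability lands on the top token, which is why low values pair well with structured output.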

Real-World Use Cases (a.k.a. Why This Actually Matters)

Let’s talk business. This isn’t just prompt poetry or LLM theory — this is the stuff you can build real products and profitable tools with.

Here’s what structured prompting + Gemini API unlocks:

Customer support bots that don’t just answer — they categorize, summarize, escalate.

E-commerce automations that extract size, color, material, and product types from messy text.

No-code tools that use Gemini to turn user inputs into structured JSON workflows.

Smart email classifiers that route support, sales, and feedback to the right pipeline.

LLM agents that reason through decisions, then hand your system clean, usable answers.

It’s not a toy. It’s not a trend. It’s a new layer of software — and the foundation for building production-ready AI tools and LLM-powered backend systems.

And yes — it inspires me to build great things. The ideas are already flying. If you’re building too, let’s connect. Share, learn, iterate — and stay tuned, because I’ll be sharing what I explore next.

Final Thoughts

Structured output isn’t a gimmick — it’s a productivity multiplier. Especially if you're building real tools, not just chatting.

This is where Gemini shines: bridging the gap between LLM power and predictable APIs. Once you get the schema and config right, the results are not just smart — they're usable.

=> Enjoyed this deep dive?

Subscribe to DecryptAI for more hands-on tutorials, AI news, and tools to turn models into products. And if you're building with Gemini or anything LLM-related — reply or comment. I’d love to hear what you’re working on.